What does all this actually mean in practice? What can or should organizations, from enterprises to governments, do, avoid doing, or do differently?

That question obviously cannot be answered comprehensively for your organization on a site like this; doing so requires a dedicated project or ongoing governance changes to align with the Mindful Automation principles. That said, mere awareness can and does change behavior.

At least six domains need to be taken into account; in other words, Mindful Automation needs to be looked at through a number of perspectives, or lenses. This is a work in progress, and more detailed frameworks will become available here at a later date, but the lenses themselves, along with some useful tools (particularly for the resilience engineering section), are available here now.

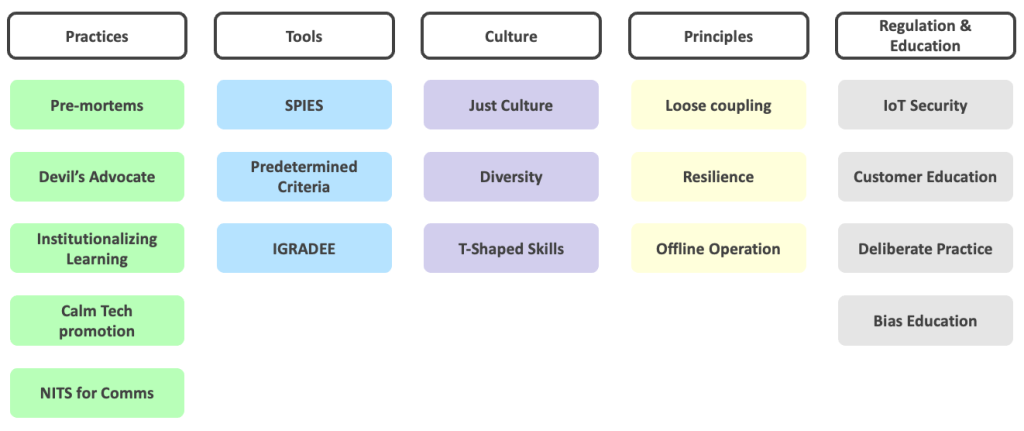

The diagram above could be considered version 0.2 of the Mindful Automation framework; it is a living document and will be revised to better align with the components below.

The key components are:

Social Impact Perspective

Consider the impact of automation on society, particularly on roles, inequality, and accessibility. Focus on developing solutions that bridge the digital divide and promote inclusivity. Educate businesses and users on the responsible use of automation, emphasizing the importance of balancing technological advancements with social well-being. When implementing automated solutions, analyze the downstream impacts and externalities and be transparent about the results.

Engineering Perspective

Ensure that the tools and platforms you provide are reliable, resilient, scalable, and efficient, and that they do not add unnecessary technical debt. From a Mindful Automation perspective, a key principle is resilience; building resilient systems is a large sub-topic in its own right and draws on elements from other perspectives and disciplines.

Contact Transition Level for more details on this.

Ethics Perspective

Investigate and analyze the ethical implications of automation, addressing potential biases, job displacement, and privacy concerns. Ensure that your products and services are designed with fairness, accountability, and transparency in mind. Encourage collaboration with ethics experts and integrate ethical guidelines into the development process.

This may or may not include using an Ethical AI or Responsible AI framework, a large number of which are now available globally from governments and other organizations.

Environmental Perspective

Sustainability is not an optional feature; it must be considered from the very beginning. The low-hanging fruit is the energy and materials used by automation, and the resulting emissions and waste handling. Minimizing these impacts and participating in the Circular Economy to the extent possible is critical, but not sufficient.

In the past, more efficient technological solutions have also often led to the Jevons Paradox: efficiency gains make a resource cheaper to use, so total consumption rises rather than falls. Whether this could happen with a given solution should be assessed in the initial studies.
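To make the mechanism concrete, here is a minimal arithmetic sketch. It assumes a deliberately simple model in which total consumption is the volume of automated work multiplied by the resource used per unit of work; the function names and all figures (tasks per day, kWh per task) are hypothetical and chosen only for illustration.

```python
# Illustrative only: every number below is hypothetical, not a measurement.

def total_resource_use(tasks: float, resource_per_task: float) -> float:
    """Total consumption = volume of work x resource intensity per unit of work."""
    return tasks * resource_per_task


def jevons_backfire(efficiency_gain: float, demand_growth: float) -> bool:
    """True if total use rises despite the efficiency gain.

    Under this simple model, new total / old total = demand_growth / efficiency_gain,
    so consumption grows whenever demand grows by a larger factor than efficiency.
    """
    return demand_growth / efficiency_gain > 1.0


# Before: 1,000 tasks per day at 2.0 kWh each (hypothetical).
before = total_resource_use(tasks=1_000, resource_per_task=2.0)

# After: each task needs half the energy, but cheaper tasks invite more use,
# and volume triples to 3,000 tasks per day (hypothetical).
after = total_resource_use(tasks=3_000, resource_per_task=1.0)

print(f"before: {before:.0f} kWh/day, after: {after:.0f} kWh/day")
print("consumption rose:", jevons_backfire(efficiency_gain=2.0, demand_growth=3.0))
```

Real rebound effects are harder to estimate than this, but even a back-of-the-envelope check like this one can flag whether a projected efficiency gain might be outpaced by increased use.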

Education and Career Perspective

Internally, when automating even part of a role, the people who will be affected need to be not only consulted but supported before any changes are made. Assurances that support will be offered later are not sufficient; people need to see clearly how their role will be affected and what support will be offered: re-training needs to happen at current compensation levels, and pathways to other roles, preferably better-paid ones, should be offered with assurances.

If employees are not confident that they will be taken care of in the transition to automation, they will resist or, at a minimum, suffer from a lack of motivation. On the other hand, when they can see how automation affects them positively, they can become advocates for disrupting their own roles.

For indirectly affected people and the wider public, develop educational resources and training programs that help users understand the benefits and potential risks of automation. Promote digital literacy and empower users to make informed decisions about adopting and using automation technologies.

Legal Perspective

Ensure that any solutions comply with relevant laws and regulations, including those on data protection, privacy, and intellectual property. Collaborate with legal experts to navigate the complex regulatory landscape and mitigate potential risks. This should arguably be self-evident, but it is not. For hiring algorithms, for example, bias is not simply automated bias (which would be bad enough) but a clear-cut illegal activity in most countries. Ethical AI frameworks too often focus on “soft” tweaks to activities that might be clearly illegal when examined through a legal lens.

Beyond the “obvious”, there are legal liability considerations to take into account. Depending on how our automated system is implemented, do our concepts of accountability and responsibility need to be overhauled? Is there a risk that we use people as a “moral crumple zone”, where the human operator absorbs the blame for failures of the automated system?

Design Perspective

Focus on creating automation solutions that are informed by human factors at a very fundamental level. Adopt a human-centered design approach that prioritizes user experience and safety, accommodates different levels of technological literacy, and ensures proper training where those differences cannot be accommodated. Sometimes, adding an element of automation can dramatically alter the role profile, principally by moving a person from the role of actor to that of supervisor, which can have far-reaching consequences.

Economic Perspective

This should also be a self-evident point, but in the race to automate and implement AI, holistic ROI models and follow-up are sometimes lacking. Many a system is automated with a narrow view of profitability, ignoring longer-term costs and externalities (which might still be internalized by other parts of the same organization).
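As a minimal sketch of the gap between a narrow and a holistic view, the Python below computes two ROI figures for the same hypothetical project. The cost categories, field names, and figures are assumptions for illustration rather than a prescribed model; a real assessment would also span multiple years, apply discounting, and consider externalities that are hard to price.

```python
from dataclasses import dataclass


@dataclass
class AutomationCase:
    """Hypothetical single-period figures for an automation project (illustrative units)."""
    investment: float          # up-front cost of building the system
    direct_savings: float      # savings counted by the sponsoring team
    running_costs: float       # maintenance, monitoring, licences
    costs_elsewhere: float     # costs internalized by other parts of the organization,
                               # e.g. extra support load, re-work, re-training


def narrow_roi(case: AutomationCase) -> float:
    """ROI as seen by the sponsoring team: direct savings against the investment only."""
    return (case.direct_savings - case.investment) / case.investment


def holistic_roi(case: AutomationCase) -> float:
    """ROI after also counting running costs and costs borne elsewhere in the organization."""
    net = (case.direct_savings - case.running_costs
           - case.costs_elsewhere - case.investment)
    return net / case.investment


project = AutomationCase(investment=100, direct_savings=150,
                         running_costs=20, costs_elsewhere=40)
print(f"narrow ROI:   {narrow_roi(project):+.0%}")
print(f"holistic ROI: {holistic_roi(project):+.0%}")
```

With these made-up figures the narrow view reports a healthy return while the holistic view shows a loss, which is exactly the kind of discrepancy that follow-up reviews are meant to catch.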

Stakeholder Perspective

Engage with a diverse set of stakeholders, including customers, employees, industry partners, government, and the wider public, to understand their needs and concerns. Establish open channels of communication and foster collaboration to ensure that your automation solutions address the diverse needs of all stakeholders. This must not become a PR exercise; it must be a genuine two-way dialogue with stakeholders.

Cultural / Global Perspective

This lens, like many others, should permeate every step of the process from the very beginning and inform everything from initial solution design to deployment. There are many types of organizational culture, but from a safety perspective, a Just Culture is one of the best to strive for.

Incorporating cultural and global perspectives helps ensure the development of well-rounded, inclusive, and adaptable solutions. Different cultures bring unique insights, knowledge, and experiences that can contribute to a more comprehensive understanding of the challenges and opportunities posed by automation.

Each cultural and geographical context will also have unique requirements, preferences, and constraints. By considering these factors, mindful automation can be tailored to meet the specific needs of different communities, ensuring that the solutions are effective and relevant in diverse settings.

Diversity is key to this, and must go far beyond the traditional lens of gender diversity.